Going with the gut

We spend lots of time trying to get away from our irrational, biased intuition. But what if it’s right?

Human beings are famously fallible. We make all sorts of poor decisions, particularly in times of stress when we have no time to think properly. But we also screw up when we’re given all the time in the world to luxuriate over a problem. We fall prey to scams and illusions. We make decisions that don’t seem to benefit us. We hold on to mistaken beliefs long past the point that they’ve been proved wrong, doubling down on our mistakes. Our internal, unconscious biases lead us to make incorrect decisions that are objectively racist, sexist, classist, and every possible kind of -ist.

We make these mistakes when we’re too trusting of our guts – when we rely too heavily on our intuition, rather than thinking things through rationally. And they’re so common – and so costly – that a whole discipline, straddling psychology and economics, has emerged to study these irrational behaviours, cataloguing the cognitive biases and heuristics that so often get us into trouble.

Modern behavioural economics was pioneered in particular by the Israeli psychologists Daniel Kahneman and Amos Tversky. In his best-selling book Thinking, Fast and Slow, Kahneman contrasts “System 1” thinking – automatic, fast, prone to errors and biases – with the conscious, slower, less error-prone “System 2” thinking. Kahneman and Tversky’s work, and that of the behavioural economists who followed them, has tended to focus on the myriad ways that System 1 thinking fails us by taking mental shortcuts, making biased and incorrect decisions, and generally being irrational.

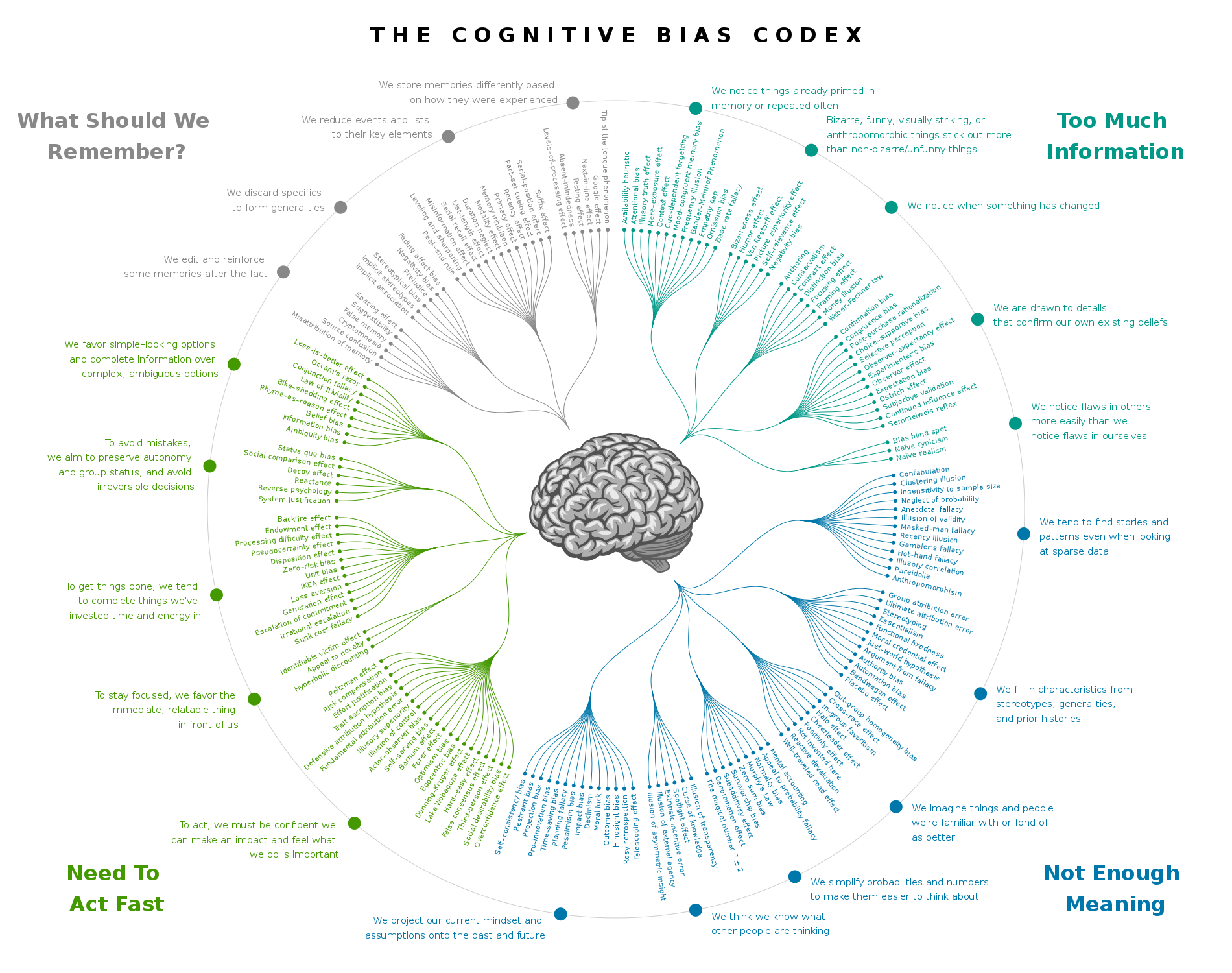

Since the 1970s, there has been an explosion of research into cognitive biases. Hundreds have been catalogued, and people go to great lengths to avoid them – hoping, in the process, to avoid behaving irrationally.

(Image: John Manoogian III, CC BY-SA 4.0)

It’s entirely right to try to get to the bottom of our unconscious biases. They’re at the root not just of countless individual errors, but of widespread societal problems, too. But there’s a slight problem with this line of study. The idea of defining “irrational” behaviour implies an objective “rational” behaviour against which to compare it. We can only say “that act was an irrational one” if we can also say “the rational thing to do would have been…”. But to do so objectively is patently absurd: people’s decisions are influenced by their thoughts, their beliefs, their preferences and their priorities; they’re subject to whims and fluctuations; they might make sense only to them, only in that moment. How could you ever sift through that and try to pick out even a glint of objective rationality?

What if, instead of trying to define the ever-moving target of objective rationality, we stopped trying to hold up rationality as an ideal to be strived for at all? What if thoroughly thought-through, carefully computed analysis wasn’t always the best way to make decisions? What if our intuition has things to offer us that our conscious thoughts can’t match? What possibilities open up to us then?

At times when we’re particularly time-poor, or face information overload, rational analysis is impossible. We make snap judgements; we recognise patterns; we go with our gut. We don’t use complex algorithms, but instead prefer heuristics: rules of thumb and shortcuts, decision-making strategies that try to use only the most important information rather than considering everything.

The German psychologist Gerd Gigerenzer has catalogued many of these heuristics, characterising them as “fast and frugal”. They’re fast because they don’t take much time; and they’re frugal because they don’t take much cognitive energy.

In experiments with the American cognitive psychologist Daniel Goldstein, Gigerenzer gave students in both Germany and the United States pairs of city names and asked them to identify which city was larger by population. That’s a tough proposition: American students might never have heard of Bielefeld or Duisburg, and likewise Germans might well be ignorant of Eugene, Oregon, or Aurora, Illinois.

Gigerenzer and Goldstein discovered something strange. German students scored better than American students when it came to American cities. But this wasn’t because they were somehow more knowledgeable about geography: American students scored better than the Germans did when it came to German cities, too.

It turns out that the students were using a simple heuristic, one that Gigerenzer and Goldstein called the recognition heuristic: if you’re given two cities, and you’ve heard of one but not the other, simply assume that the one you’ve heard of is the bigger. It makes sense: the more influential and famous a city is, the more likely you are to have heard of it; and bigger cities are generally more influential and famous than smaller ones. But the heuristic only helps when you recognise one city and not the other. You’re likely to have heard of a higher proportion of the cities in your own country than of those overseas, so at home you more often recognise both cities in a pair and the heuristic can’t help you. That explains the difference in the students’ scores.
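The rule is simple enough to write down. What follows is a minimal sketch, not Gigerenzer and Goldstein's experimental procedure: the function names and the idea of passing in a set of recognised names are our own assumptions. The function gives no answer when both or neither city is recognised, because that is exactly when the heuristic has nothing to say. The helper counts how often it does apply, which shows why recognising more cities can make the rule less useful.

```python
from itertools import combinations
from typing import Iterable, Optional, Set


def recognition_heuristic(a: str, b: str, recognised: Set[str]) -> Optional[str]:
    """Guess which of two cities is larger using recognition alone.

    Returns the recognised city if exactly one is recognised; otherwise
    returns None, because recognition cannot tell the two apart.
    """
    knows_a = a in recognised
    knows_b = b in recognised
    if knows_a and not knows_b:
        return a
    if knows_b and not knows_a:
        return b
    return None  # both or neither recognised: fall back on other knowledge


def applicable_fraction(cities: Iterable[str], recognised: Set[str]) -> float:
    """Share of all city pairs for which the heuristic gives an answer."""
    pairs = list(combinations(cities, 2))
    usable = sum(
        1 for a, b in pairs if recognition_heuristic(a, b, recognised) is not None
    )
    return usable / len(pairs) if pairs else 0.0


german = ["Berlin", "Munich", "Bielefeld", "Duisburg"]
print(recognition_heuristic("Munich", "Bielefeld", {"Berlin", "Munich"}))  # Munich
print(applicable_fraction(german, {"Berlin", "Munich"}))  # usable in 4 of 6 pairs
```

Someone who recognises only two of these four cities can apply the rule to four of the six pairs; someone who recognises all four can apply it to none.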

Students were guessing, but guesses are anything but random; this wasn’t like flipping a coin. Our brain makes things available to us when we guess, and in this case there’s a strong correlation between the qualities that make a city likely to be “front of mind” – offered up as a guess – and its population size. And it turns out that this correlation holds for many more qualities of many more objects than just the size of cities.

Heuristics can clearly be faster and more frugal than computation. But they can be more accurate, too. Gigerenzer suggests that, often, “less is more”: having more information, more data, more branches in the decision-making tree can actually make for worse decisions, not better ones.

In 2006, Sascha Serwe and Christian Frings set about testing the recognition heuristic. They wondered if recognition could predict the outcomes of tennis matches – a far more dynamic environment than cities, whose relative sizes stay fairly constant over decades. Tennis players, on the other hand, rise and fall in reputation over a much shorter period. Two conclusions emerged. First, recognition explains most of the guesses made when people are asked to predict the outcome of a tennis match: they generally pick the player they’ve heard of. And second, this simple heuristic is more effective than the predictions of tennis experts:

“[The study] needed semi-ignorant people, ideally those who recognized about half of the contestants. Among others, they contacted German amateur tennis players, who indeed recognized on average only about half of the contestants in the 2004 Wimbledon Gentlemen’s Singles tennis tournament. Next, all Wimbledon players were ranked according to the number of participants who had heard of them. How well would this ‘collective recognition’ predict the winners of the matches? Recognition turned out to be a better predictor (72% correct) than the ATP Entry Ranking (66%), the ATP Champions Race (68%), and the seeding of the Wimbledon experts (69%).”

Tennis experts have access to much more information than the amateurs: they don’t just recognise every player’s name, but know much more about their playing styles, their form and their historical performance. Yet this extra information does nothing to help them beat the intuition of amateurs.
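The “collective recognition” predictor in the quoted study can be sketched the same way: count how many participants recognise each player, then back the more widely recognised player in each match. This is a minimal sketch with invented player names and counts, not the study's data or scoring procedure; in particular, leaving out matches where the counts tie is our own assumption.

```python
from typing import Dict, List, Optional, Tuple

# (player_one, player_two, actual_winner)
Match = Tuple[str, str, str]


def predict_winner(p1: str, p2: str, recognition: Dict[str, int]) -> Optional[str]:
    """Back whichever player more participants recognised; None on a tie."""
    c1, c2 = recognition.get(p1, 0), recognition.get(p2, 0)
    if c1 == c2:
        return None
    return p1 if c1 > c2 else p2


def recognition_accuracy(matches: List[Match], recognition: Dict[str, int]) -> float:
    """Fraction of decidable matches won by the more-recognised player."""
    decided = correct = 0
    for p1, p2, winner in matches:
        guess = predict_winner(p1, p2, recognition)
        if guess is None:
            continue  # assumption: ties are left out of the score
        decided += 1
        correct += guess == winner
    return correct / decided if decided else 0.0


# Invented example: how many of 10 hypothetical participants recognised each player.
recognition = {"Player A": 9, "Player B": 3, "Player C": 5, "Player D": 5}
matches = [
    ("Player A", "Player B", "Player A"),  # more-recognised player wins
    ("Player C", "Player B", "Player B"),  # upset: less-recognised player wins
    ("Player C", "Player D", "Player C"),  # tie in recognition: skipped
]
print(recognition_accuracy(matches, recognition))  # 0.5
```

Nothing about form, ranking or playing style enters the prediction; the only input is how many people have heard of each player.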

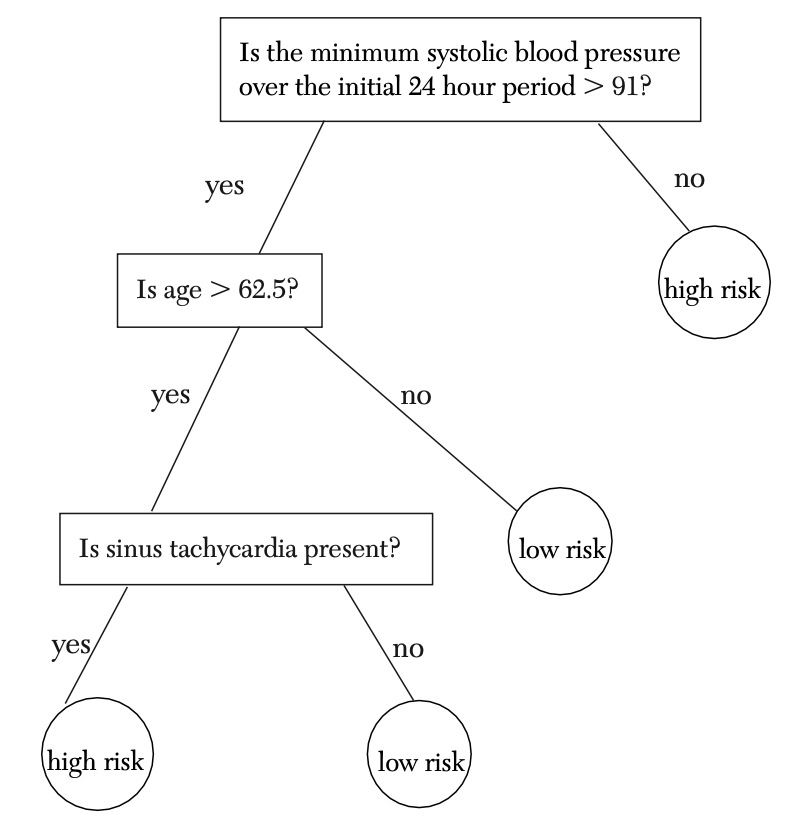

It’s not just guessing from the gut that outperforms experts with lots of data, though. We don’t just have to trust our subconscious; heuristics can be designed, too. In the 1990s, a team of researchers developed a simple decision tree for assessing patients who are suffering heart attacks:

The decision tree requires doctors to answer at most three yes-or-no questions, which is certainly fast and frugal. Its simplicity alone would make it useful even if it were less accurate than more involved methods. Yet it is actually more accurate than other, far more complicated statistical methods, allowing doctors to make effective decisions in practice. There are countless other examples from many different domains, many of them catalogued by Gigerenzer in his fast-and-frugal heuristics “toolbox”.
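A fast-and-frugal tree of this kind has a characteristic shape: the questions are asked in a fixed order, and each one offers an exit that ends the decision immediately. The sketch below shows that shape only. The cue names, thresholds and outcome labels are hypothetical placeholders, not the clinical questions from the heart-attack study, and the code is not a diagnostic tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Patient = Dict[str, float]


@dataclass
class Cue:
    question: Callable[[Patient], bool]  # a yes/no question about the patient
    exit_on: bool                        # the answer that ends the decision here
    decision: str                        # the classification made on that exit


def classify(cues: List[Cue], otherwise: str, patient: Patient) -> str:
    """Ask each question in order and stop at the first exit.

    `otherwise` acts as the second exit of the final question, so at most
    len(cues) questions are ever asked.
    """
    for cue in cues:
        if cue.question(patient) == cue.exit_on:
            return cue.decision
    return otherwise


# Hypothetical three-question tree; cue names and thresholds are placeholders.
tree = [
    Cue(lambda p: p["cue_1"] > 0.5, exit_on=True, decision="high risk"),
    Cue(lambda p: p["cue_2"] > 0.5, exit_on=False, decision="low risk"),
    Cue(lambda p: p["cue_3"] > 0.5, exit_on=True, decision="high risk"),
]

print(classify(tree, "low risk", {"cue_1": 0.0, "cue_2": 1.0, "cue_3": 1.0}))  # high risk
```

Because every question can end the decision, a patient may be classified after the first question, in which case the later cues are never looked up at all. That early stopping is where the frugality comes from.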

It’s perhaps not surprising to learn, then, that these fast and frugal heuristics are close to the behaviours exhibited by experts when making decisions in their day-to-day work – intuitive behaviours, distinct from but still a result of their formal training.

In 1993, the psychologists Beth Crandall and Karen Getchell-Reiter were studying nurses in a neonatal intensive care unit. They found that nurses were able to detect infections in babies even before the babies had blood tests, and without necessarily being able to articulate exactly what they were seeing; they just had an intuition that the babies would develop sepsis. By probing the nurses and understanding what they were seeing, Crandall and Getchell-Reiter were able to identify new diagnostic clues that were previously unknown, creating new diagnostic criteria for infant sepsis that are used in nurses’ training to this day. These criteria weren’t rational or even conscious; they had emerged over time as the result of the nurses having observed thousands of patients.

The psychologist Gary Klein has made a career out of studying how experts make decisions in their fields – from firefighters to chess-players, from Marines to nurses. In his studies of fireground commanders, who direct operations at the scene of a fire, Klein found that, rather than generating many options and carefully comparing them, commanders went with their first intuitive response to a situation:

“The initial hypothesis was that commanders would restrict their analysis to only a pair of options, but that hypothesis proved to be incorrect. In fact, the commanders usually generated only a single option, and that was all they needed. They could draw on the repertoire of patterns that they had compiled during more than a decade of both real and virtual experience to identify a plausible option, which they considered first. They evaluated this option by mentally simulating it to see if it would work in the situation they were facing… If the course of action they were considering seemed appropriate, they would implement it. If it had shortcomings, they would modify it. If they could not easily modify it, they would turn to the next most plausible option and run through the same procedure until an acceptable course of action was found.”

Klein called this strategy “recognition-primed decision-making”. The commanders were able to draw on their tacit knowledge – their intuitive ability to recognise conditions and compare them to their past experience. This is far quicker than weighing up all of the potential options – something that isn’t feasible amid the confusion and hurry of a fire – and, with the benefit of their experience, it is also more effective.
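The procedure in Klein's quote is a loop over options taken one at a time, rather than a side-by-side comparison. The sketch below is our own loose rendering of that description, not Klein's model: `simulate` and `modify` are hypothetical stand-ins for the commander's mental simulation and adjustment, and re-simulating a modified option before acting on it is an assumption. Because options come from a lazy iterator, later options are never even generated if the first one works.

```python
from typing import Callable, Iterator, Optional, TypeVar

Option = TypeVar("Option")


def recognition_primed_decision(
    options: Iterator[Option],
    simulate: Callable[[Option], bool],
    modify: Callable[[Option], Optional[Option]],
) -> Optional[Option]:
    """Return the first workable course of action, or None if none is found.

    `options` yields candidates from most to least plausible.
    """
    for option in options:
        if simulate(option):        # mental simulation says it will work: act
            return option
        adjusted = modify(option)   # shortcomings: try to patch the option
        if adjusted is not None and simulate(adjusted):
            return adjusted
        # could not easily fix it: move on to the next most plausible option
    return None


# Invented example: the plan names and rules are purely illustrative.
def candidate_plans() -> Iterator[str]:
    yield "interior attack"
    yield "defensive attack"


def works(plan: str) -> bool:
    return plan in {"interior attack with ventilation", "defensive attack"}


def patch(plan: str) -> Optional[str]:
    return plan + " with ventilation" if plan == "interior attack" else None


print(recognition_primed_decision(candidate_plans(), works, patch))
# interior attack with ventilation
```

Here the first plan fails its simulation but can be patched, so the second plan is never considered, mirroring the commanders who "usually generated only a single option".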

This combines the best of both worlds. The amateurs predicting the outcomes of tennis matches were guessing based on a huge array of subconsciously internalised data, but they remained amateurs. Firefighters perform a similar process, trusting that their subconscious has surfaced the right solution. But they do so even more effectively, for two reasons: first, they have a much better store of patterns against which to match scenarios, born of long experience; and second, they have a strong ability to play out scenarios in their heads, rejecting ones that don’t work. Their gut provides a starting point, but they’re happy to move past it if they don’t think it will work.

So: heuristics and intuition can be faster, more efficient, and more accurate than more complex and more involved conscious processes. But that’s not always the case. Less isn’t always more; our brains are clearly subject to biases that impede the quality of our decision-making. So when is intuition able to help?

Klein and Kahneman, writing together, suggest that intuitive judgements are most trustworthy in situations that have three qualities:

- High predictability – the domain has outcomes that can be predicted. A medical diagnosis or a fire has this quality; the stock market and climate change do not.
- High expertise – an expert with a high level of relevant experience is present. Experts can trust their guts; amateurs probably can’t.
- High feedback – there is plenty of good-quality feedback to validate decisions. Events on long timescales, or rare events, are less likely to have these feedback loops.

Clearly, it’s still worth identifying the situations in which our intuition leads us astray. But we should keep in mind that, particularly in situations with these three qualities, our instinctive response has much to offer too. Human beings will never be perfectly rational, because objective rationality doesn’t exist. That’s not a failing, though; our heuristics have brought us far on our fast and frugal evolutionary journey, and can take us further still.

Further reading

On “fast and frugal” heuristics:

Gerd Gigerenzer, Peter M. Todd, and the ABC Research Group. “Simple Heuristics That Make Us Smart”. Oxford University Press, 2000

Gerd Gigerenzer. “Why Heuristics Work”. Perspectives on Psychological Science 3(1), 1 January 2008

Gerd Gigerenzer, Daniel Goldstein and Ulrich Hoffrage. “Fast and Frugal Heuristics Are Plausible Models of Cognition”. Psychological Review 115(1):230–239, January 2008

Gerd Gigerenzer and Daniel Goldstein. “The recognition heuristic: A decade of research”. Judgment and Decision Making 6(1):100–121, January 2011

Gary Klein, Robert Calderwood and Anne Clinton-Cirocco. “Rapid Decision Making on the Fire Ground”. US Army Research Institute for the Behavioral and Social Sciences, June 1988

Beth Crandall and Karen Getchell-Reiter. “Critical decision method: A technique for eliciting concrete assessment indicators from the intuition of NICU nurses”. Advances in Nursing Science 16(1):42–51, 1993

Leo Breiman, Jerome Friedman, Charles Stone and Richard Olshen. “Classification and Regression Trees”. Chapman and Hall, 1984

On the divide between naturalistic decision-making and cognitive biases:

Daniel Kahneman and Gary Klein. “Conditions for intuitive expertise: A failure to disagree”. American Psychologist 64(6):515–526, 2009

Books:

John Kay and Mervyn King. “Radical Uncertainty”. The Bridge Street Press, 2020

Gary Klein. “Sources of Power: How People Make Decisions”. MIT Press, 20th Anniversary Edition, 2017
